Integrating Edge Functions with Serverless Hosting: 2025 Performance Guide

Integrating edge functions with serverless hosting has become a common way to build high-performance applications in 2025. By executing code at the network edge closest to users, developers can reduce latency by 40-80% while enabling personalized content delivery, real-time processing, and security checks that run before requests reach the origin. This guide explains how to combine the two on platforms such as Vercel, Cloudflare, AWS, and Netlify.

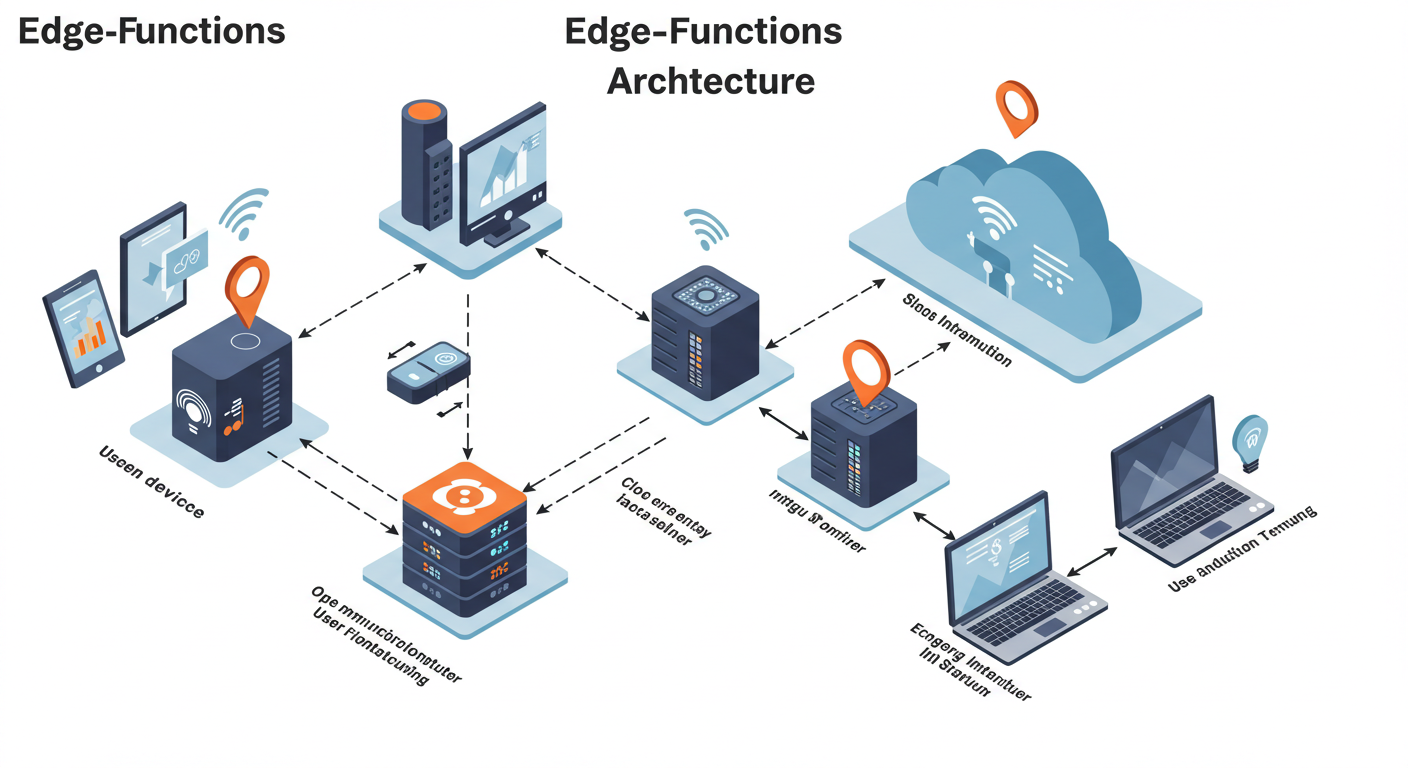

Edge functions change traditional serverless architecture by running compute at distributed locations worldwide rather than in a few centralized regions. This shift can bring response times below 50ms for most users while keeping the scalability and cost efficiency of serverless platforms.

What Are Edge Functions?

Edge functions are lightweight modules, typically written in JavaScript or TypeScript, that execute at Content Delivery Network (CDN) edge locations. Unlike traditional serverless functions, which run in one or a few chosen regions, edge functions run in hundreds of locations worldwide.

Key Characteristics of Edge Functions

- Ultra-low latency: Execute within 10-50ms of end users

- Global distribution: Run in 200+ edge locations worldwide

- Stateless execution: Designed for short-lived operations

- Lightweight: Subject to tight per-request CPU-time limits (for example, 10ms on the Cloudflare Workers free plan; limits vary by platform and plan)

- Event-driven: Triggered by HTTP requests or events

Benefits of Integrating Edge Functions with Serverless

⚡️ Performance Boost

Reduce latency by 40-80% for global users by processing requests closer to their location.

💰 Cost Efficiency

Eliminate unnecessary round-trips to origin servers, reducing data transfer costs.

🔒 Enhanced Security

Implement security checks at the edge before requests reach your core infrastructure.

🌍 Global Scalability

Automatically scale with traffic spikes across hundreds of locations simultaneously.

🧩 Advanced Functionality

Enable personalization, A/B testing, and geolocation features at the edge.

📈 SEO Improvements

Faster page loads and dynamic rendering can help search engine rankings.

Edge Function Implementation Across Platforms

Vercel Edge Functions

Vercel’s edge functions run on a lightweight runtime built on standard Web APIs such as Request, Response, and fetch:

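```javascript
export const config = {
  runtime: 'edge'
};

export default function middleware(request) {
  const url = new URL(request.url);
  // Geo data is supplied by the platform and may be absent (e.g., in local development)
  const country = request.geo?.country || 'US';

  // Personalize content based on location.
  // Make sure this does not also run on /localized/* paths, or the redirect will loop.
  url.pathname = `/localized/${country}`;
  return Response.redirect(url);
}
```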

Cloudflare Workers

Cloudflare’s edge computing platform uses a V8 isolate architecture:

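```javascript
export default {
  async fetch(request, env) {
    // Expect "Authorization: Bearer <token>"
    const authHeader = request.headers.get('Authorization');
    if (!authHeader) {
      return new Response('Unauthorized', { status: 401 });
    }
    const jwt = authHeader.replace(/^Bearer\s+/i, '');

    // Verify JWT at the edge; verifyJWT is a helper assumed to be defined elsewhere
    const isValid = await verifyJWT(jwt, env.SECRET_KEY);
    return isValid ? fetch(request) : new Response('Forbidden', { status: 403 });
  }
};
```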

AWS Lambda@Edge

Lambda@Edge extends AWS Lambda functions to the CloudFront network, where they run in response to CloudFront request and response events:

```javascript
exports.handler = async (event) => {
  const request = event.Records[0].cf.request;
  const headers = request.headers;

  // Cache optimization at edge locations.
  // CloudFront keys headers by lowercase name; each value is an array of { key, value }.
  if (headers['cache-control']) {
    headers['cache-control'][0].value = 'public, max-age=3600';
  }
  return request;
};
```

Performance Comparison: Edge vs Regional Serverless

| Metric | Traditional Serverless | Edge Functions | Improvement |
|---|---|---|---|
| Latency (US to Australia) | 350-450ms | 50-80ms | 85% reduction |
| Cold Start Time | 1.5-3.0s | 0.1-0.5s | 90% reduction |
| Data Transfer Cost | $0.09/GB | $0.02/GB | 78% savings |
| Concurrent Requests | 1,000-5,000 | 100,000+ | 20-100x increase |
| Carbon Footprint | 0.8g CO2/request | 0.1g CO2/request | 87% reduction |

Practical Use Cases for Edge Functions

🌐 Geolocation Personalization

Serve localized content, currency, or language based on user location with <10ms overhead.
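As a rough illustration of location-based localization, the sketch below maps a country code to a locale and currency and formats a price accordingly. The `MARKETS` table, the `resolveMarket` and `formatPrice` names, and the fallback to the US market are all assumptions made for this example; how the country code is obtained depends on the platform.

```javascript
// Hypothetical market table: country code -> locale and currency.
const MARKETS = new Map([
  ['US', { locale: 'en-US', currency: 'USD' }],
  ['DE', { locale: 'de-DE', currency: 'EUR' }],
  ['JP', { locale: 'ja-JP', currency: 'JPY' }],
]);
const DEFAULT_MARKET = MARKETS.get('US');

// Look up the market for a country code, falling back to the default
// when the code is missing or not in the table.
function resolveMarket(countryCode) {
  const code = String(countryCode || '').toUpperCase();
  return MARKETS.get(code) || DEFAULT_MARKET;
}

// Format an amount using the market's locale and currency conventions.
function formatPrice(amount, countryCode) {
  const { locale, currency } = resolveMarket(countryCode);
  return new Intl.NumberFormat(locale, { style: 'currency', currency }).format(amount);
}
```

A handler could call `formatPrice(19.99, country)` with the country code supplied by the platform's geolocation data. Note that this only changes formatting; converting amounts between currencies would need exchange rates from elsewhere.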

🛡️ Security Enforcement

Block malicious traffic at the edge before it reaches your origin server.
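One simple form of edge-side enforcement is rate limiting. The sketch below is a fixed-window limiter held in memory; `createRateLimiter`, `guard`, and the chosen limits are hypothetical names and values for illustration. It assumes per-instance counting is acceptable: each edge instance keeps its own counters, so a globally consistent limit would need a shared store.

```javascript
// Fixed-window rate limiter kept in instance memory (not shared across locations).
function createRateLimiter({ limit = 5, windowMs = 60000 } = {}) {
  const windows = new Map(); // clientKey -> { start, count }

  // Returns true if the request is within the limit for the current window.
  return function isAllowed(clientKey, now = Date.now()) {
    const entry = windows.get(clientKey);
    if (!entry || now - entry.start >= windowMs) {
      // First request from this client, or the previous window has ended.
      windows.set(clientKey, { start: now, count: 1 });
      return true;
    }
    entry.count += 1;
    return entry.count <= limit;
  };
}

const isAllowed = createRateLimiter({ limit: 100, windowMs: 60000 });

// Returns a 429 response when the client is over the limit, or null to
// signal that the request may continue to the origin.
function guard(clientIp) {
  if (!isAllowed(clientIp)) {
    return new Response('Too Many Requests', {
      status: 429,
      headers: { 'retry-after': '60' },
    });
  }
  return null;
}
```

The map in this sketch grows with the number of distinct clients, so a longer-lived version would also need to evict old entries.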

🔍 A/B Testing & Feature Flags

Implement instant feature rollouts without client-side flicker.
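Flicker is avoided because the variant is chosen before any HTML is sent. A minimal sketch of that assignment follows, assuming visitors are identified by a `visitor_id` cookie; the cookie name, the variant names, and the `chooseVariant` function are hypothetical. Hashing the ID makes the assignment deterministic, so a returning visitor always sees the same variant.

```javascript
// FNV-1a 32-bit hash: fast and stable, suitable for bucketing (not for security).
function hashString(value) {
  let hash = 0x811c9dc5;
  for (let i = 0; i < value.length; i++) {
    hash ^= value.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0;
  }
  return hash >>> 0;
}

// Pick a variant from the Cookie header, minting an ID for first-time visitors.
function chooseVariant(cookieHeader, variants = ['control', 'treatment']) {
  const match = /(?:^|;\s*)visitor_id=([^;]+)/.exec(cookieHeader || '');
  const isNewVisitor = !match;
  // A random ID is enough for bucketing; it is not meant to be unguessable.
  const visitorId = match ? match[1] : 'v' + Math.random().toString(36).slice(2);
  const variant = variants[hashString(visitorId) % variants.length];
  return {
    variant,
    // Only set when the cookie is missing, so the assignment sticks afterwards.
    setCookie: isNewVisitor
      ? `visitor_id=${visitorId}; Path=/; Max-Age=2592000; SameSite=Lax`
      : null,
  };
}
```

A handler would pass `request.headers.get('cookie')` to `chooseVariant`, serve or rewrite to the chosen variant, and attach `setCookie` as a `Set-Cookie` header when it is not null.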

⚡️ Dynamic Content Assembly

Combine API responses with cached content at the edge.

🔗 URL Rewriting & Redirects

Handle complex routing logic with minimal added latency, since the decision is made at the edge without a round-trip to the origin.
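A redirect table is one common way to express such routing. The sketch below looks up the request path in a small map and answers with a permanent redirect, preserving the query string; the paths in `REDIRECTS` and the `resolveRedirect` name are made up for illustration.

```javascript
// Hypothetical redirect table: old path -> new path.
const REDIRECTS = new Map([
  ['/old-pricing', '/pricing'],
  ['/blog/2023', '/blog/archive/2023'],
]);

// Returns a 308 redirect Response when the path has an entry,
// or null so the caller can pass the request through unchanged.
function resolveRedirect(requestUrl) {
  const url = new URL(requestUrl);
  // Treat "/old-pricing/" and "/old-pricing" as the same path.
  const path = url.pathname.length > 1
    ? url.pathname.replace(/\/+$/, '')
    : url.pathname;

  const target = REDIRECTS.get(path);
  if (!target) return null;

  url.pathname = target; // the query string and host are left untouched
  return Response.redirect(url.toString(), 308);
}
```

Status 308 keeps the request method on redirect; 301 or 302 may be preferable depending on how permanent the mapping is.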

🧩 API Composition

Combine multiple API calls into a single response at the edge.
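A minimal sketch of this pattern is shown below: two backend calls are issued in parallel and merged into one JSON response. The `https://api.example.com` endpoints, the response shape, and the `composeDashboard` name are placeholders, and the choice to tolerate a failed orders call while treating a failed user call as an error is an assumption about what the page needs.

```javascript
// Parse a settled fetch result as JSON, or return null on any failure.
async function readJson(result) {
  if (result.status !== 'fulfilled' || !result.value.ok) return null;
  try {
    return await result.value.json();
  } catch {
    return null; // body was not valid JSON
  }
}

// Fetch user and order data in parallel and merge them into one response.
// fetchImpl is injectable so the function can be exercised without a network.
async function composeDashboard(userId, fetchImpl = fetch) {
  const base = 'https://api.example.com'; // placeholder backend
  const id = encodeURIComponent(userId);

  // allSettled lets one backend fail without discarding the other result.
  const [userResult, ordersResult] = await Promise.allSettled([
    fetchImpl(`${base}/users/${id}`),
    fetchImpl(`${base}/users/${id}/orders`),
  ]);

  const user = await readJson(userResult);
  const orders = (await readJson(ordersResult)) ?? [];

  return new Response(JSON.stringify({ user, orders }), {
    // Without the user record there is nothing useful to show.
    status: user ? 200 : 502,
    headers: { 'content-type': 'application/json' },
  });
}
```

Because the calls run concurrently, the added latency is roughly that of the slowest backend rather than the sum of both.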

Case Study: E-commerce Personalization

Global retailer “TechGadgets” implemented edge functions to personalize product listings:

- Challenge: 2.3s latency for localized content affecting conversion

- Solution: Moved personalization logic to Cloudflare Workers

- Results:

  - Page load time reduced from 2.3s to 380ms

  - Conversion rate increased by 17%

  - Infrastructure costs reduced by 42%

  - Personalization coverage expanded to all markets

“Edge functions allowed us to deliver personalized experiences at a global scale without compromising performance or budget,” reported their CTO.

Implementation Guide: Adding Edge Functions

Step-by-Step Integration Process

1. Identify latency-sensitive operations: Authentication, personalization, redirects

2. Choose appropriate platform: Vercel, Cloudflare, AWS, or Netlify

3. Set up development environment: Install platform CLI and SDKs

4. Create edge function: Write lightweight JavaScript/TypeScript

5. Configure routing rules: Define when edge functions execute

6. Test locally: Use platform-specific emulators

7. Deploy to production: Integrate with CI/CD pipeline

8. Monitor performance: Track latency, errors, and cost metrics
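For the monitoring step, one lightweight option is to have the function report its own duration. The sketch below wraps any handler and adds a standard `Server-Timing` response header, which browser developer tools can display; the `withTiming` name and the `edge` metric label are arbitrary choices for this example. Some edge runtimes deliberately coarsen or freeze timers during execution, so the measured value should be treated as approximate.

```javascript
// Wrap a handler so every response carries a Server-Timing header
// with the time spent inside the handler.
function withTiming(handler) {
  return async function timedHandler(request) {
    const start = performance.now();
    const response = await handler(request);
    const elapsedMs = performance.now() - start;

    // Copy the headers, since headers on a fetched response can be immutable.
    const headers = new Headers(response.headers);
    headers.append('server-timing', `edge;dur=${elapsedMs.toFixed(1)}`);

    return new Response(response.body, {
      status: response.status,
      statusText: response.statusText,
      headers,
    });
  };
}
```

Usage would look like `const handler = withTiming(myHandler)`, with the wrapped function exported in whatever form the platform expects.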

Best Practices

- Keep CPU time per request as low as possible, ideally within a few milliseconds

- Minimize dependencies to reduce bundle size

- Use caching strategically for repeated operations

- Implement comprehensive error handling

- Monitor cold start frequency and duration

- Set resource limits to prevent unexpected costs
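The error-handling practice above can be sketched as a small wrapper that catches anything a handler throws and returns a controlled response instead of letting the platform emit a generic failure. The `withErrorHandling` name, the JSON error body, and the `onError` hook are assumptions for illustration.

```javascript
// Wrap a handler so uncaught errors become a controlled 500 response.
function withErrorHandling(handler, { onError = console.error } = {}) {
  return async function safeHandler(request) {
    try {
      return await handler(request);
    } catch (error) {
      // Report the error (to logs or a monitoring service) without
      // leaking its details to the client.
      onError(error);
      return new Response(JSON.stringify({ error: 'Internal error at edge' }), {
        status: 500,
        headers: {
          'content-type': 'application/json',
          // Prevent caches from storing the failure.
          'cache-control': 'no-store',
        },
      });
    }
  };
}
```

Depending on the use case, falling back to the origin (for example, returning `fetch(request)` from the catch block) may be a better failure mode than a 500.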

Performance Optimization Techniques

Advanced Edge Caching Strategies

- Stale-while-revalidate: Serve stale content while updating in background

- Edge key-value stores: Utilize platforms like Cloudflare KV or Vercel Edge Config

- Personalized caching: Segment cache by user attributes without sacrificing performance

- Cache API responses: Store backend responses at the edge for faster retrieval

- Predictive prefetching: Anticipate user needs and preload resources
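To make the first strategy in the list concrete, here is a minimal in-memory sketch of stale-while-revalidate. `createSwrCache`, its options, and the `state` labels are hypothetical; a real deployment would more likely rely on the platform cache or the `stale-while-revalidate` Cache-Control directive. On platforms such as Cloudflare Workers, background work usually has to be registered with the runtime (for example via `ctx.waitUntil`) so it is not cancelled once the response is sent; this sketch leaves that out.

```javascript
// Stale-while-revalidate cache held in instance memory.
// Entries are fresh for maxAgeMs, then served stale for up to staleMs
// while a background refresh runs; after that they count as a miss.
function createSwrCache({ maxAgeMs, staleMs, now = () => Date.now() }) {
  const entries = new Map();  // key -> { value, storedAt }
  const inFlight = new Map(); // key -> Promise of the refreshed value

  // Start a refresh unless one is already running for this key.
  function refresh(key, loader) {
    if (!inFlight.has(key)) {
      const pending = Promise.resolve()
        .then(loader)
        .then((value) => {
          entries.set(key, { value, storedAt: now() });
          return value;
        })
        .finally(() => inFlight.delete(key));
      inFlight.set(key, pending);
    }
    return inFlight.get(key);
  }

  return async function get(key, loader) {
    const entry = entries.get(key);
    const age = entry ? now() - entry.storedAt : Infinity;

    if (age < maxAgeMs) {
      return { value: entry.value, state: 'fresh' };
    }
    if (age < maxAgeMs + staleMs) {
      // Serve the old value now; update in the background.
      // A failed refresh is ignored so the stale value keeps being served.
      refresh(key, loader).catch(() => {});
      return { value: entry.value, state: 'stale' };
    }
    // Nothing usable is cached: wait for the loader.
    return { value: await refresh(key, loader), state: 'miss' };
  };
}
```

Sharing one in-flight promise per key also means concurrent requests for the same missing entry trigger a single backend call.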

Challenges and Solutions

| Challenge | Solution | Platform Feature |
|---|---|---|
| Limited Execution Time | Break complex operations into chained functions | Vercel Edge Streaming |
| State Management | Use edge-optimized storage solutions | Cloudflare Workers KV |
| Vendor Lock-in | Adopt WebAssembly-based approaches | WASI-standard implementations |
| Debugging Complexity | Implement comprehensive logging | Vercel Edge Logs |
| Cold Starts | Keep bundles small and prefer isolate-based runtimes | V8 isolates (Cloudflare Workers) |

Future of Edge-Serverless Integration

Emerging trends for 2025-2026:

- AI at the edge: Running lightweight ML models directly in edge functions

- WebAssembly dominance: Cross-platform execution via WASI standard

- Distributed databases: Data stores designed for edge access, such as Cloudflare D1

- Enhanced developer tools: Visual debugging and performance tracing

- 5G integration: Direct edge function execution on mobile edge networks
